Ollama - Self-Host Your AI Chat

Ollama

Get up and running with large language models, locally.


Prerequisites

- Docker

- Git
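Before cloning anything, it may help to confirm the tools are actually on the PATH. The snippet below is a minimal sketch; `check_tool` is a hypothetical helper name, and `docker-compose` is included only because the run step later in this post uses that command.

```shell
#!/bin/sh
# Report whether a tool is on PATH; return non-zero if it is not.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found"
    else
        echo "$1: missing"
        return 1
    fi
}

# Check everything this post relies on and remember if anything is absent.
missing=0
for tool in docker docker-compose git; do
    check_tool "$tool" || missing=1
done

if [ "$missing" -eq 0 ]; then
    echo "All prerequisites found."
else
    echo "Install the missing tools before continuing."
fi
```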

Installation

Clone the ollama-webui repository:

    git clone https://github.com/ollama-webui/ollama-webui.git
    cd ollama-webui

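Edit docker-compose.yml. I am running on an NVIDIA GPU, so I uncommented the GPU section (the deploy block under the ollama service). If you also have an NVIDIA GPU, uncomment it as well: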

    version: '3.6'

    services:
      ollama:
        # Uncomment below for GPU support
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: 1
                  capabilities:
                    - gpu
        volumes:
          - ollama:/root/.ollama
        # Uncomment below to expose Ollama API outside the container stack
        # ports:
        #   - 11434:11434
        container_name: ollama
        pull_policy: always
        tty: true
        restart: unless-stopped
        image: ollama/ollama:latest

      ollama-webui:
        build:
          context: .
          args:
            OLLAMA_API_BASE_URL: '/ollama/api'
          dockerfile: Dockerfile
        image: ollama-webui:latest
        container_name: ollama-webui
        depends_on:
          - ollama
        ports:
          - 3000:8080
        environment:
          - "OLLAMA_API_BASE_URL=http://ollama:11434/api"
        extra_hosts:
          - host.docker.internal:host-gateway
        restart: unless-stopped

    volumes:
      ollama: {}
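The GPU reservation only works if Docker itself can reach the GPU, which on the host requires the NVIDIA driver and the NVIDIA Container Toolkit. A quick way to check, sketched below, is to run `nvidia-smi` in a throwaway container. The helper name `container_gpu_check` is hypothetical, and the `ubuntu` image is just a convenient small image, not something this setup depends on.

```shell
#!/bin/sh
# Run nvidia-smi in a temporary container that requests all GPUs.
# Assumption: NVIDIA driver and NVIDIA Container Toolkit are installed on the host.
container_gpu_check() {
    docker run --rm --gpus all ubuntu nvidia-smi
}
```

Running `nvidia-smi` directly on the host first, then `container_gpu_check`, helps tell a driver problem apart from a Docker configuration problem.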

Build and start the stack with docker-compose:

    docker-compose up -d --build
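The build can take a while, so the WebUI may not answer immediately. As a rough sketch, a small polling helper can wait for it; `wait_for_url` is a hypothetical name, and the 2-second pause and default of 30 attempts are arbitrary choices.

```shell
#!/bin/sh
# Poll a URL until it answers successfully or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS]
wait_for_url() {
    url=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        # -f makes curl fail on HTTP errors, -s keeps it quiet.
        if curl -fs -o /dev/null "$url"; then
            echo "up: $url"
            return 0
        fi
        tries=$((tries - 1))
        if [ "$tries" -gt 0 ]; then
            sleep 2
        fi
    done
    echo "no response: $url" >&2
    return 1
}
```

With the port mapping `3000:8080` from the compose file, `docker-compose ps` should list both containers, and `wait_for_url http://localhost:3000` should eventually report the WebUI as up. If it does not, `docker-compose logs ollama-webui` is the first place to look.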

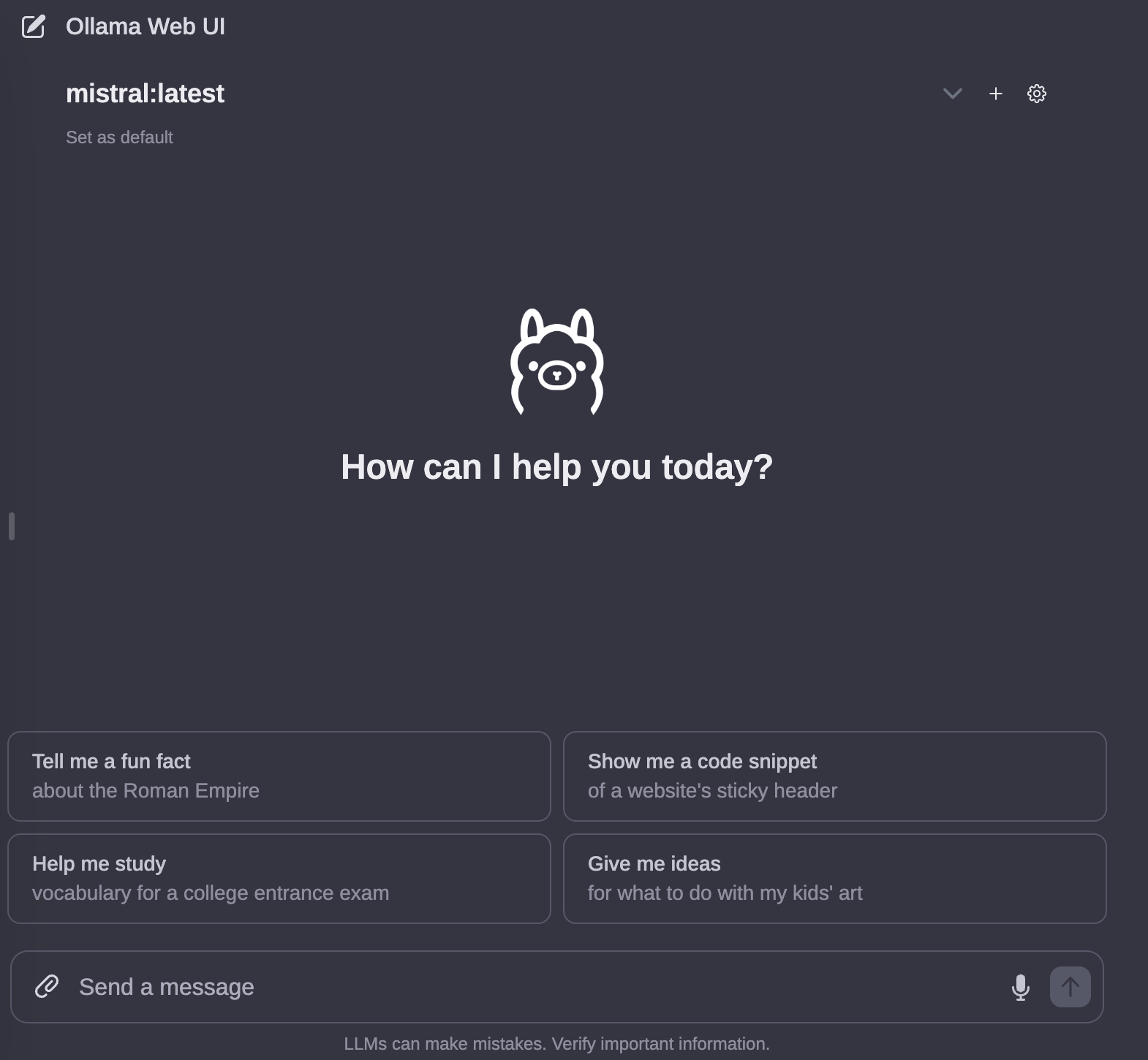

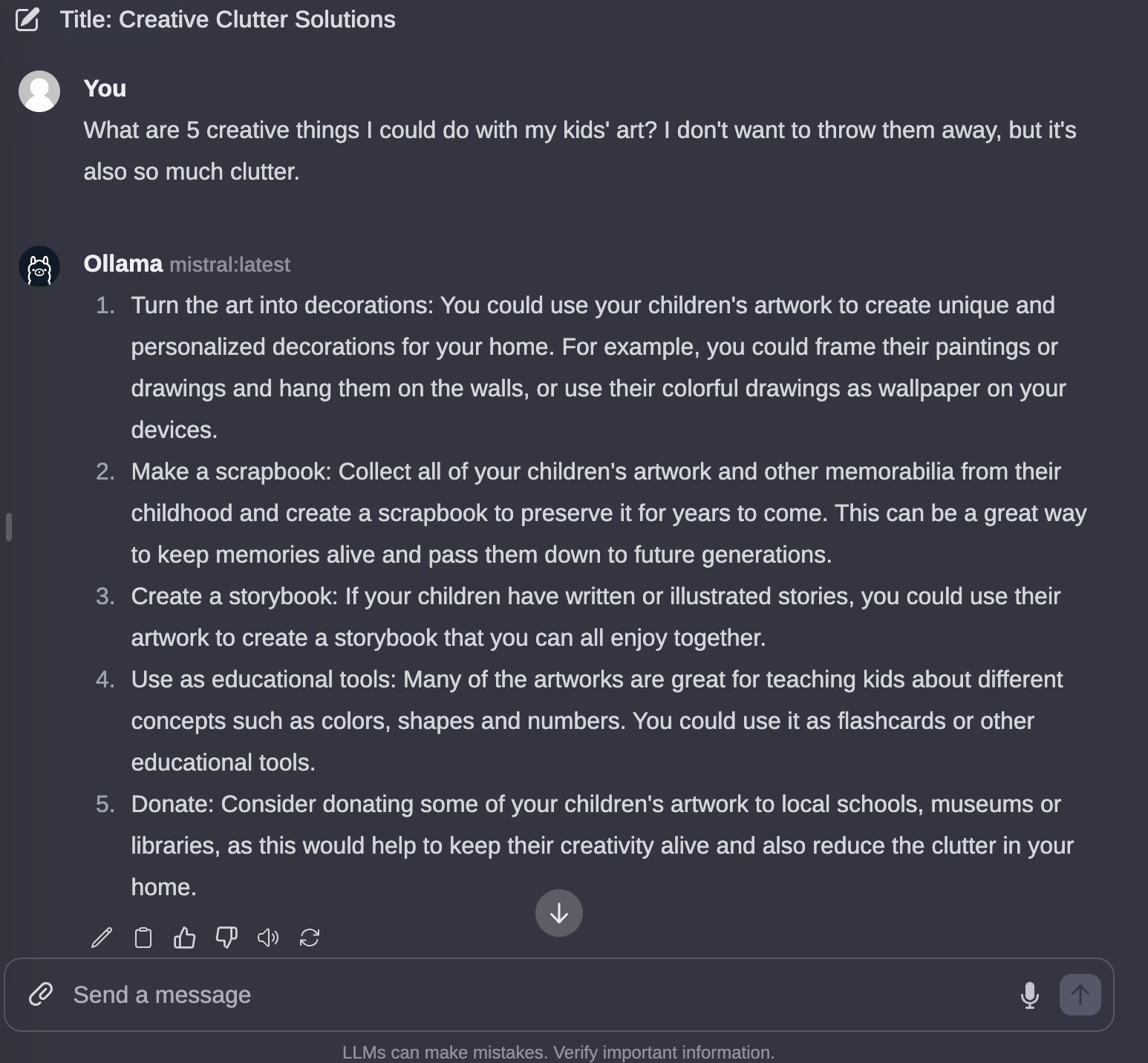

WebUI

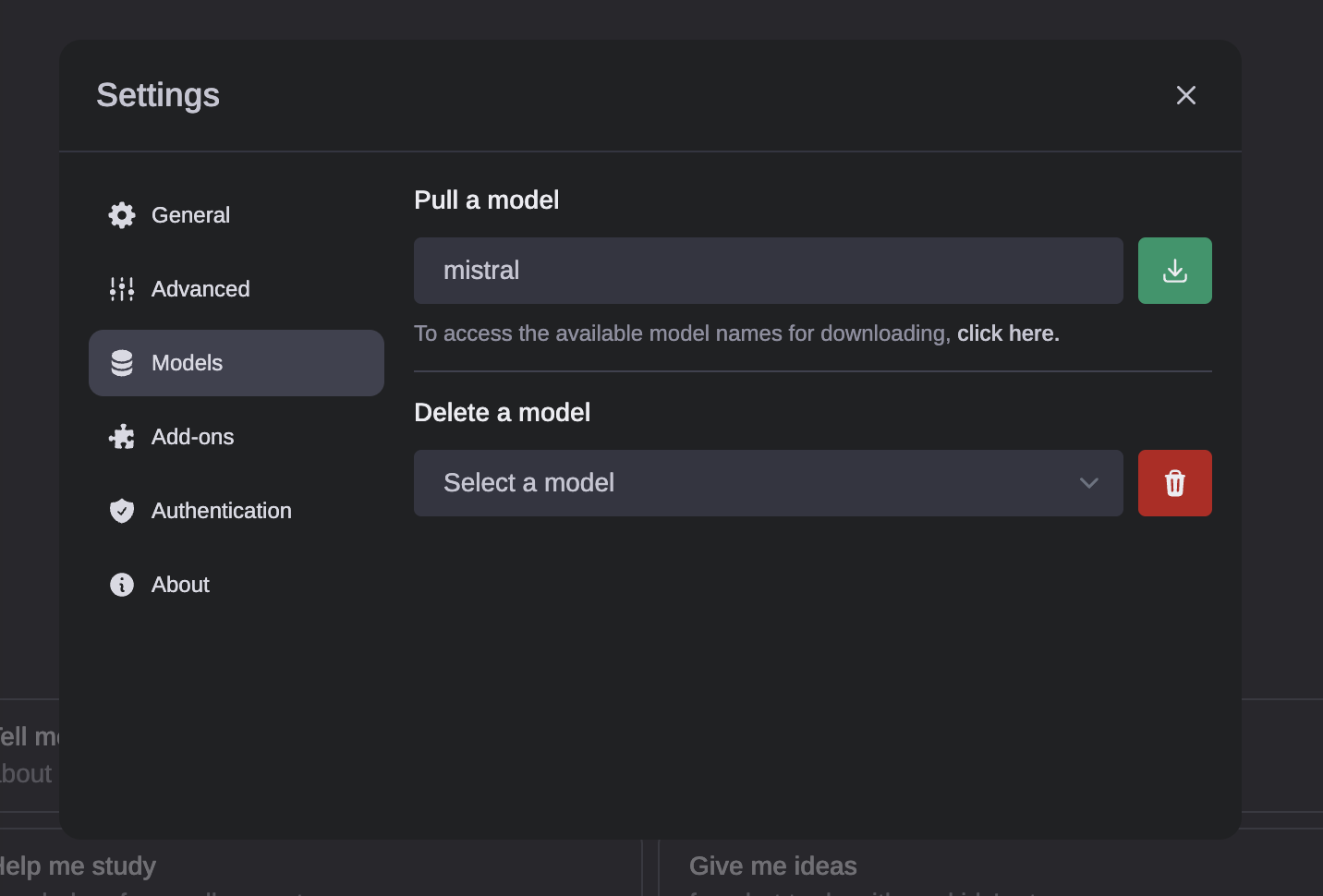

In the WebUI (http://localhost:3000 with the port mapping above), go to Settings and download a model.

P.S. Please check the hardware requirements of the LLM before you download it.

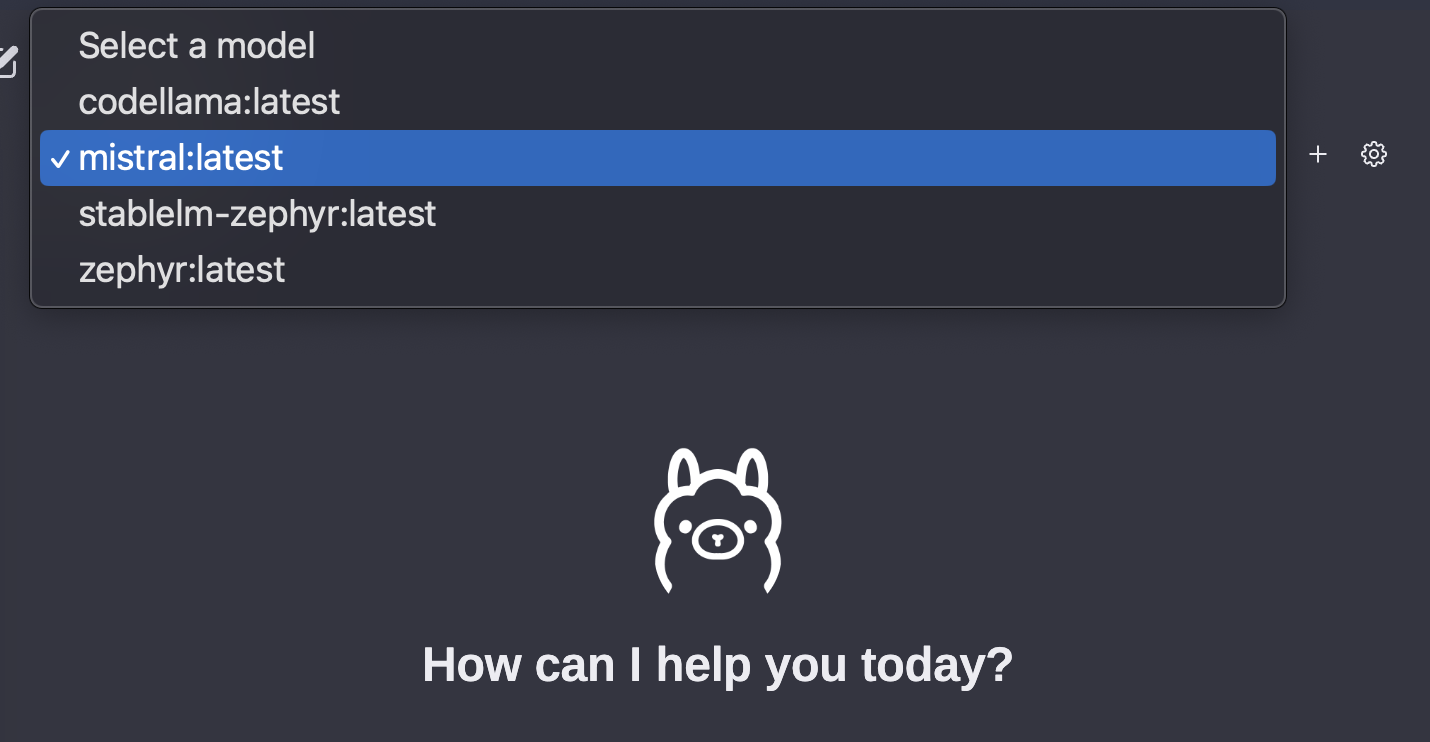

Select the model you just downloaded.
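A model can also be downloaded from the command line by running the `ollama` CLI inside the container. This is a sketch, not part of the original walkthrough: the helper names are hypothetical, and the container name `ollama` comes from `container_name` in the compose file.

```shell
#!/bin/sh
# Container name as set by container_name in docker-compose.yml.
OLLAMA_CONTAINER=${OLLAMA_CONTAINER:-ollama}

# Download a model inside the running Ollama container.
pull_model() {
    docker exec "$OLLAMA_CONTAINER" ollama pull "$1"
}

# List the models the container already has.
list_models() {
    docker exec "$OLLAMA_CONTAINER" ollama list
}
```

For example, `pull_model llama2` followed by `list_models`; `llama2` here is only an example model name, so pick one that fits your hardware.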

References

https://github.com/ollama-webui/ollama-webui

Additional

You can expose the Ollama API for LiteLLM integration. I’m still learning this and will share it later.
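As a starting point, once the `ports` lines under the ollama service are uncommented and the stack is restarted, the API should be reachable on port 11434 of the host. The sketch below uses two Ollama endpoints, `/api/tags` and `/api/generate`. The helper names are hypothetical, and the JSON is built by naive string interpolation, so it only suits prompts without quotes or backslashes.

```shell
#!/bin/sh
# Base URL of the exposed Ollama API (assumes the 11434:11434 mapping is enabled).
OLLAMA_URL=${OLLAMA_URL:-http://localhost:11434}

# List the models the server has downloaded.
list_api_models() {
    curl -fsS "$OLLAMA_URL/api/tags"
}

# Request a single, non-streamed completion.
# Usage: generate MODEL PROMPT
generate() {
    model=$1
    prompt=$2
    curl -fsS "$OLLAMA_URL/api/generate" \
        -d "{\"model\": \"$model\", \"prompt\": \"$prompt\", \"stream\": false}"
}
```

For example, `generate llama2 "Why is the sky blue?"`, where `llama2` stands in for whichever model you downloaded.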

This post is licensed under CC BY 4.0 by the author.