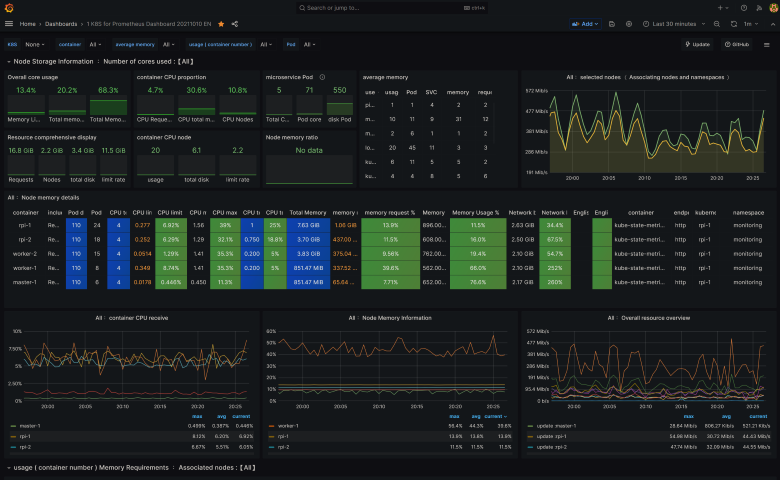

Monitor Your Kubernetes with Grafana & Prometheus

Prometheus is a monitoring solution that stores time series data such as metrics. Grafana lets you visualize the data stored in Prometheus (and other sources). This post shows how to deploy the kube-prometheus-stack Helm chart so that Kubernetes cluster metrics are collected in Prometheus and visualized in Grafana.

Prerequisites

Install Helm

```bash
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

Helm will be used to install kube-prometheus-stack.
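Everything in the rest of this post goes through `helm` and `kubectl`, so a quick PATH check can catch a missing tool early. This is a minimal sketch built on the shell's `command -v`. The `require_cmd` helper is a hypothetical name and is not part of Helm.

```bash
#!/usr/bin/env bash
# require_cmd (hypothetical helper): return non-zero and print a message
# to stderr when the named command is not found on PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing required command: $1" >&2
    return 1
  fi
}

# Usage: check both CLIs used in this post, then print the Helm version.
# require_cmd helm && require_cmd kubectl && helm version --short
```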

Helm

Add the Helm repository:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
```
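After you add the repository, you can refresh the local index and search for the chart to confirm that Helm can see kube-prometheus-stack. This sketch assumes that `helm search repo` prints a header row followed by one row per chart, with the chart name in the first column. `chart_available` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# chart_available (hypothetical helper): refresh repo indexes, then succeed
# only if `helm search repo` lists the exact chart name given as $1.
chart_available() {
  local chart="$1"
  helm repo update >/dev/null
  # Skip the header row, keep the NAME column, match the whole line.
  helm search repo "$chart" | awk 'NR > 1 { print $1 }' | grep -qxF "$chart"
}

# Usage:
# chart_available prometheus-community/kube-prometheus-stack && echo "chart found"
```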

Create files that hold the Grafana admin login. Replace the sample password with your own:

```bash
echo -n 'admin' > ./admin-user
echo -n 'P@ssw0rd' > ./admin-password
```
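The `-n` flag matters here. kubectl's `--from-file` stores each file's contents as-is, so the trailing newline that a plain `echo` appends would become part of the username or password. The self-contained demo below writes only to a temporary directory and shows the byte difference.

```bash
#!/usr/bin/env bash
# Compare file sizes with and without the trailing newline that plain
# `echo` appends. Only a temporary directory is touched.
tmp="$(mktemp -d)"
echo 'admin' > "$tmp/with-newline"
echo -n 'admin' > "$tmp/without-newline"
# wc -c counts bytes; tr strips the padding some wc implementations add.
with_bytes=$(wc -c < "$tmp/with-newline" | tr -d ' ')
without_bytes=$(wc -c < "$tmp/without-newline" | tr -d ' ')
echo "with newline: ${with_bytes} bytes, without: ${without_bytes} bytes"
rm -rf "$tmp"
```

This prints `with newline: 6 bytes, without: 5 bytes`.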

Create a monitoring namespace

```bash
kubectl create ns monitoring
```

Create the grafana-admin-credentials secret from those files:

```bash
kubectl create secret generic grafana-admin-credentials --from-file=./admin-user --from-file=./admin-password -n monitoring
```
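To confirm that the secret holds the expected values, read a key back. Secret data is stored base64-encoded, so the value extracted with a jsonpath query has to be decoded. `read_secret_key` is a hypothetical helper name that wraps a common kubectl jsonpath pattern.

```bash
#!/usr/bin/env bash
# read_secret_key (hypothetical helper): print one decoded key of a Secret.
# Args: secret name, namespace, key (e.g. admin-user).
read_secret_key() {
  local secret="$1" namespace="$2" key="$3"
  # .data values are base64-encoded, so decode after extracting.
  kubectl get secret "$secret" -n "$namespace" \
    -o jsonpath="{.data.${key}}" | base64 --decode
}

# Usage (prints "admin" if the files were created as above):
# read_secret_key grafana-admin-credentials monitoring admin-user; echo
```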

Remove the secret files

```bash
rm admin-user && rm admin-password
```

Values file

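Create a values.yaml file. The install and upgrade commands use that name. The endpoint lists hold the server IPs of the example cluster (10.0.50.101 to 10.0.50.105), and storageClassName: longhorn assumes Longhorn is installed. Change both to match your cluster.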

```yaml
fullnameOverride: prometheus

defaultRules:
  create: true
  rules:
    alertmanager: true
    etcd: true
    configReloaders: true
    general: true
    k8s: true
    kubeApiserverAvailability: true
    kubeApiserverBurnrate: true
    kubeApiserverHistogram: true
    kubeApiserverSlos: true
    kubelet: true
    kubeProxy: true
    kubePrometheusGeneral: true
    kubePrometheusNodeRecording: true
    kubernetesApps: true
    kubernetesResources: true
    kubernetesStorage: true
    kubernetesSystem: true
    kubeScheduler: true
    kubeStateMetrics: true
    network: true
    node: true
    nodeExporterAlerting: true
    nodeExporterRecording: true
    prometheus: true
    prometheusOperator: true

alertmanager:
  fullnameOverride: alertmanager
  enabled: true
  ingress:
    enabled: false

grafana:
  enabled: true
  fullnameOverride: grafana
  forceDeployDatasources: false
  forceDeployDashboards: false
  defaultDashboardsEnabled: true
  defaultDashboardsTimezone: Asia/Hong_Kong
  service:
    type: LoadBalancer
  serviceMonitor:
    enabled: true
  admin:
    existingSecret: grafana-admin-credentials
    userKey: admin-user
    passwordKey: admin-password

kubeApiServer:
  enabled: true

kubelet:
  enabled: true
  serviceMonitor:
    metricRelabelings:
      - action: replace
        sourceLabels:
          - node
        targetLabel: instance

kubeControllerManager:
  enabled: true
  endpoints: # ips of servers
    - 10.0.50.101
    - 10.0.50.102
    - 10.0.50.103
    - 10.0.50.104
    - 10.0.50.105

coreDns:
  enabled: true

kubeDns:
  enabled: false

kubeEtcd:
  enabled: true
  endpoints: # ips of servers
    - 10.0.50.101
    - 10.0.50.102
    - 10.0.50.103
    - 10.0.50.104
    - 10.0.50.105
  service:
    enabled: true
    port: 2381
    targetPort: 2381

kubeScheduler:
  enabled: true
  endpoints: # ips of servers
    - 10.0.50.101
    - 10.0.50.102
    - 10.0.50.103
    - 10.0.50.104
    - 10.0.50.105

kubeProxy:
  enabled: true
  endpoints: # ips of servers
    - 10.0.50.101
    - 10.0.50.102
    - 10.0.50.103
    - 10.0.50.104
    - 10.0.50.105

kubeStateMetrics:
  enabled: true

kube-state-metrics:
  fullnameOverride: kube-state-metrics
  selfMonitor:
    enabled: true
  prometheus:
    monitor:
      enabled: true
      relabelings:
        - action: replace
          regex: (.*)
          replacement: $1
          sourceLabels:
            - __meta_kubernetes_pod_node_name
          targetLabel: kubernetes_node

nodeExporter:
  enabled: true
  serviceMonitor:
    relabelings:
      - action: replace
        regex: (.*)
        replacement: $1
        sourceLabels:
          - __meta_kubernetes_pod_node_name
        targetLabel: kubernetes_node

prometheus-node-exporter:
  fullnameOverride: node-exporter
  podLabels:
    jobLabel: node-exporter
  extraArgs:
    - --collector.filesystem.mount-points-exclude=^/(dev|proc|sys|var/lib/docker/.+|var/lib/kubelet/.+)($|/)
    - --collector.filesystem.fs-types-exclude=^(autofs|binfmt_misc|bpf|cgroup2?|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|iso9660|mqueue|nsfs|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|selinuxfs|squashfs|sysfs|tracefs)$
  service:
    portName: http-metrics
  prometheus:
    monitor:
      enabled: true
      relabelings:
        - action: replace
          regex: (.*)
          replacement: $1
          sourceLabels:
            - __meta_kubernetes_pod_node_name
          targetLabel: kubernetes_node
  resources:
    requests:
      memory: 512Mi
      cpu: 250m
    limits:
      memory: 2048Mi

prometheusOperator:
  enabled: true
  prometheusConfigReloader:
    resources:
      requests:
        cpu: 200m
        memory: 50Mi
      limits:
        memory: 100Mi

prometheus:
  enabled: true
  prometheusSpec:
    replicas: 1
    replicaExternalLabelName: "replica"
    ruleSelectorNilUsesHelmValues: false
    serviceMonitorSelectorNilUsesHelmValues: false
    podMonitorSelectorNilUsesHelmValues: false
    probeSelectorNilUsesHelmValues: false
    retention: 1d
    enableAdminAPI: true
    walCompression: true
    additionalScrapeConfigs: |
      - job_name: 'my-server'
        metrics_path: /metrics
        static_configs:
          - targets: ['10.0.50.100:9100']
    storageSpec:
      volumeClaimTemplate:
        metadata:
          name: prometheus-prometheus-prometheus-db
        spec:
          storageClassName: longhorn
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 5Gi

thanosRuler:
  enabled: false
```
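The `my-server` job in `additionalScrapeConfigs` scrapes a node exporter outside the cluster at 10.0.50.100:9100. Before installing, you can check that this endpoint actually serves node exporter metrics. The helper below is hypothetical. It relies on the usual node exporter convention that metric names start with `node_`.

```bash
#!/usr/bin/env bash
# has_node_metrics (hypothetical helper): succeed if the Prometheus
# text-format payload on stdin contains at least one sample whose
# metric name starts with "node_" (comment lines start with "#").
has_node_metrics() {
  grep -q '^node_'
}

# Usage (assumes the target is reachable from where you run this):
# curl -fsS --max-time 5 http://10.0.50.100:9100/metrics | has_node_metrics \
#   && echo "node exporter is serving metrics"
```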

Install

Install kube-prometheus-stack with helm install:

```bash
helm install -n monitoring prometheus prometheus-community/kube-prometheus-stack -f values.yaml
```
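The chart starts several pods: the operator, Prometheus, Alertmanager, Grafana, kube-state-metrics and the node exporters. The sketch below lists pods whose STATUS is not yet Running or Completed. It assumes the default `kubectl get pods` column order (NAME, READY, STATUS, ...). `not_ready_pods` is a hypothetical name.

```bash
#!/usr/bin/env bash
# not_ready_pods (hypothetical helper): print the names of pods in the
# given namespace whose STATUS column is neither Running nor Completed.
not_ready_pods() {
  kubectl get pods -n "$1" --no-headers \
    | awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage: an empty result means no pod is Pending, crashing, etc.
# not_ready_pods monitoring
```

A pod can be Running while its READY column still shows something like 0/2, so treat this only as a coarse first check.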

Grafana

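Access the Grafana web UI. values.yaml exposes Grafana through a LoadBalancer service, so list the services in the monitoring namespace to find its external IP:

```bash
kubectl get svc -n monitoring
```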

```text
NAME                      TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
prometheus-prometheus     ClusterIP      10.43.13.18     <none>        9090/TCP                     6d5h
node-exporter             ClusterIP      10.43.187.199   <none>        9100/TCP                     6d5h
kube-state-metrics        ClusterIP      10.43.66.196    <none>        8080/TCP,8081/TCP            6d5h
prometheus-operator       ClusterIP      10.43.194.4     <none>        443/TCP                      6d5h
grafana                   LoadBalancer   10.43.189.189   10.0.50.201   80:31748/TCP                 6d5h
prometheus-alertmanager   ClusterIP      10.43.251.198   <none>        9093/TCP                     6d5h
alertmanager-operated     ClusterIP      None            <none>        9093/TCP,9094/TCP,9094/UDP   6d5h
prometheus-operated       ClusterIP      None            <none>        9090/TCP                     6d5h
```

Here Grafana's external IP is 10.0.50.201, so the web UI is at http://10.0.50.201 on port 80. Log in with the credentials stored in the grafana-admin-credentials secret.
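You can also query the external IP with jsonpath instead of reading it from the service listing. This sketch assumes the service is named `grafana` (set by `fullnameOverride` in values.yaml) and that your load balancer reports an IP rather than a hostname. `grafana_url` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# grafana_url (hypothetical helper): print the Grafana URL built from the
# LoadBalancer IP of the "grafana" service; the service port is 80 here.
grafana_url() {
  local ip
  ip=$(kubectl get svc grafana -n monitoring \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  if [ -z "$ip" ]; then
    echo "grafana has no external IP yet" >&2
    return 1
  fi
  echo "http://${ip}"
}

# Usage:
# grafana_url
```

If no load balancer is available, `kubectl port-forward -n monitoring svc/grafana 3000:80` makes Grafana reachable at http://localhost:3000 instead.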

Dashboard

Import a dashboard.

Search for a dashboard at Link.

I found a good dashboard for Kubernetes clusters: Link

Update

After you edit values.yaml, apply the changes with helm upgrade:

```bash
helm upgrade -n monitoring prometheus prometheus-community/kube-prometheus-stack -f values.yaml
```
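`helm upgrade --install` installs the release if it does not exist and upgrades it otherwise, so one wrapper can cover both the install and update steps. This is a sketch. `apply_values` is a hypothetical name, and the release, namespace and chart are the ones used in this post.

```bash
#!/usr/bin/env bash
# apply_values (hypothetical helper): install or upgrade the "prometheus"
# release in "monitoring" using the given values file (default values.yaml).
apply_values() {
  local values_file="${1:-values.yaml}"
  if [ ! -f "$values_file" ]; then
    echo "values file not found: $values_file" >&2
    return 1
  fi
  helm upgrade --install -n monitoring prometheus \
    prometheus-community/kube-prometheus-stack -f "$values_file"
}

# Usage:
# apply_values values.yaml
```

If an upgrade misbehaves, `helm history -n monitoring prometheus` lists the release revisions. `helm rollback -n monitoring prometheus` returns to the previous one, because omitting the revision number rolls back one release.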

This post is licensed under CC BY 4.0 by the author.